Agentic Workflow

by Vuong Ngo

Optimise Cost and Speed of Agentic Workflow - Reversed Engineering Approach

Overview

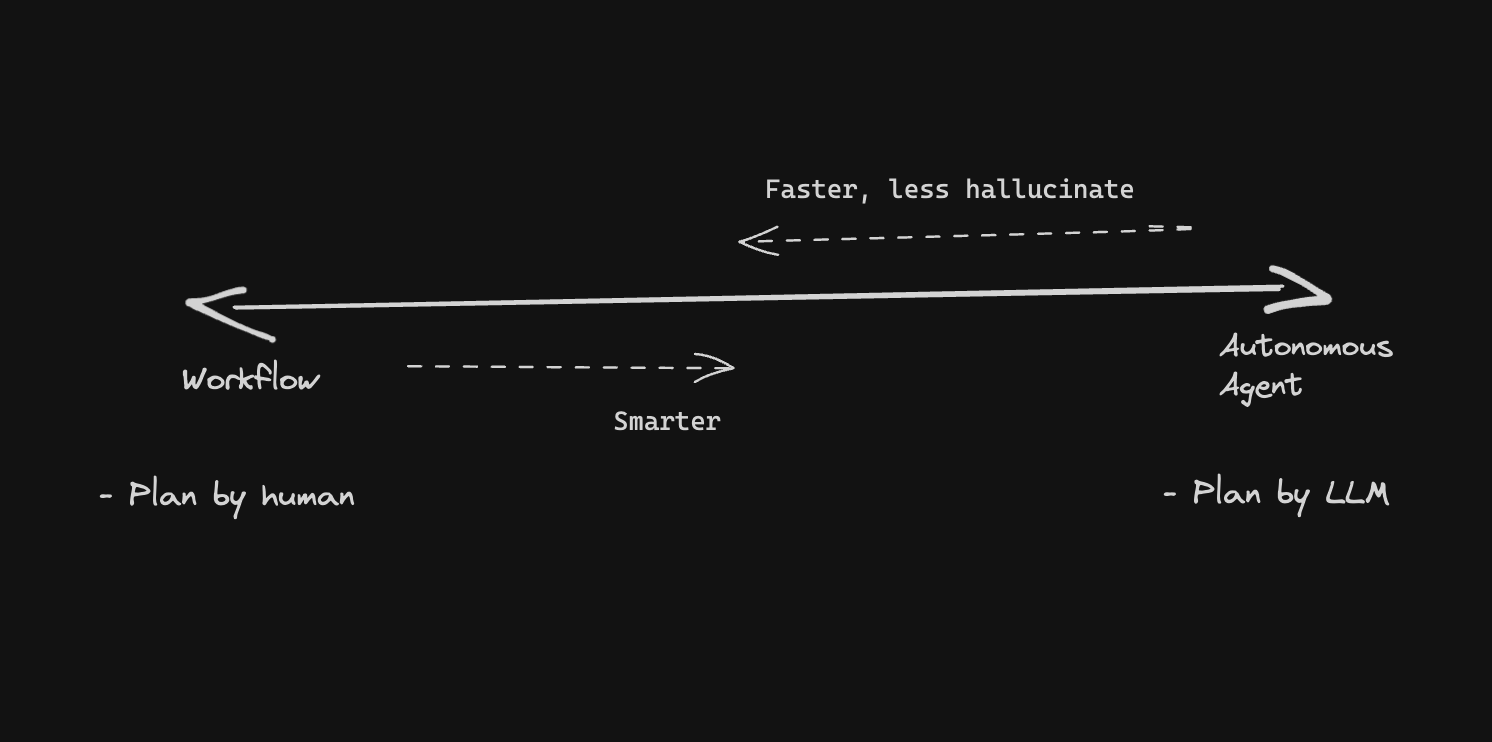

The article explores automating the "Dependencies Upgrade Process" using CrewAI and LangGraph, demonstrating how autonomous agents can streamline tedious engineering tasks. Its main focus is optimizing agentic workflows to reduce cost, improve speed, and minimize hallucinations.

Key Insights

Workflow Optimization Strategies

- Simplify Communication Flow

- Reduce Redundancy

- Memory Optimization

Performance Improvements

- Initial approach: ~$2.00 per run, ~3-minute execution

- Optimized approach: ~$0.16 per run, ~1-minute execution
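To put the two runs side by side, the approximate figures above can be turned into relative savings. This is only a back-of-the-envelope calculation on the rounded numbers quoted in this summary; the variable names are illustrative and not taken from the article.

```python
# Approximate figures quoted in the summary above (rounded by the source).
initial_cost_usd = 2.00
optimized_cost_usd = 0.16
initial_minutes = 3
optimized_minutes = 1

# Relative cost saving, as a percentage of the initial cost per run.
cost_reduction_pct = (1 - optimized_cost_usd / initial_cost_usd) * 100

# How many optimized runs fit in the budget of one initial run.
cost_ratio = initial_cost_usd / optimized_cost_usd

# Wall-clock speedup of a single run.
speedup = initial_minutes / optimized_minutes

print(f"Cost reduction: {cost_reduction_pct:.0f}%")
print(f"Cost ratio: {cost_ratio:.1f}x cheaper")
print(f"Speedup: {speedup:.0f}x faster")
```

On these rounded inputs the optimized workflow costs roughly 92% less per run (about 12.5 times cheaper) and finishes about three times faster.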

Essential Components for Production

- Evaluation

- Observability

- Human-in-the-Loop
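As a rough illustration of how two of these components might fit around a single agent step, the sketch below times the step (a very basic form of observability) and asks an approval callback before accepting its output (a stand-in for a human reviewer). It is a minimal sketch under stated assumptions: `run_step`, `approve`, and the log format are hypothetical names invented here, not CrewAI or LangGraph APIs, and not the author's implementation. Evaluation is not covered.

```python
import time
from typing import Callable, Optional


def run_step(
    name: str,
    step: Callable[[], str],
    approve: Callable[[str], bool],
    log: list,
) -> Optional[str]:
    """Run one agent step, record its duration, and gate it on approval.

    Hypothetical helper: `step` stands in for any agent call, and
    `approve` stands in for a human reviewer's decision.
    """
    start = time.perf_counter()
    output = step()
    elapsed = time.perf_counter() - start

    approved = approve(output)

    # Observability: keep a structured record of every step that ran.
    log.append({"step": name, "seconds": elapsed, "approved": approved})

    # Human-in-the-loop: only approved output is passed downstream.
    return output if approved else None


log: list = []
result = run_step(
    "propose_upgrade",
    step=lambda: "bump example-lib 1.0 -> 2.0",  # placeholder agent output
    approve=lambda out: "2.0" in out,            # placeholder for a human check
    log=log,
)
```

In a real setup the `approve` callback would typically block on a person's decision (for example a review prompt or a pull-request approval), and the log would be shipped to whatever tracing tool the team already uses.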

Conclusion

The article emphasizes viewing agentic systems as tools that empower people and enhance human capabilities rather than replace them. The author calls for careful consideration of social impact and for continued collaborative development.